Google has released a new chatbot, Bard, and has shared the experimental technology with a limited number of people in the United States and Britain.

Bard competes with similar technologies from Microsoft and its partner, the San Francisco start-up OpenAI. But Google has been cautious with its release as it tries to control the unexpected behavior exhibited by this kind of technology. It is deploying the chatbot as a service that operates separately from its internet search engine and other products.

Here is a brief guide to the new bot:

It acknowledges its flaws.

Bard tells you right away that it makes mistakes. “I’m Bard, your creative and helpful collaborator. I have limitations and won’t always get it right, but your feedback will help me improve,” reads a message at the top of the page.

Like OpenAI’s ChatGPT and Microsoft’s Bing chatbot, Bard is based on a large language model, or L.L.M., a kind of A.I. technology that learns by analyzing vast amounts of data from the internet. Because it generates text from patterns in that data, it sometimes makes mistakes and can make things up.

To get started, Bard suggests a few prompts, including “Explain why large language models sometimes make mistakes.”

It is designed for a range of casual uses.

Bard is not meant to be a search engine. It is an experimental system meant to show people the ways they can use this kind of chatbot.

It can generate ideas. It can write blog posts. And it can answer questions with facts or opinions.

When asked, “What is the most important moment in American history?,” it wrote:

The most important moment in American history is a matter of opinion, but some of the most frequently cited contenders include:

• The American Revolution (1775-1783): The founding of the United States of America and the establishment of a new nation based on the principles of liberty and democracy.

• The Civil War (1861-1865): The war that ended slavery in the United States and preserved the Union.

• The Great Depression (1929-1939): The economic crisis that plunged the United States into poverty and unemployment …

The list continued with other events.

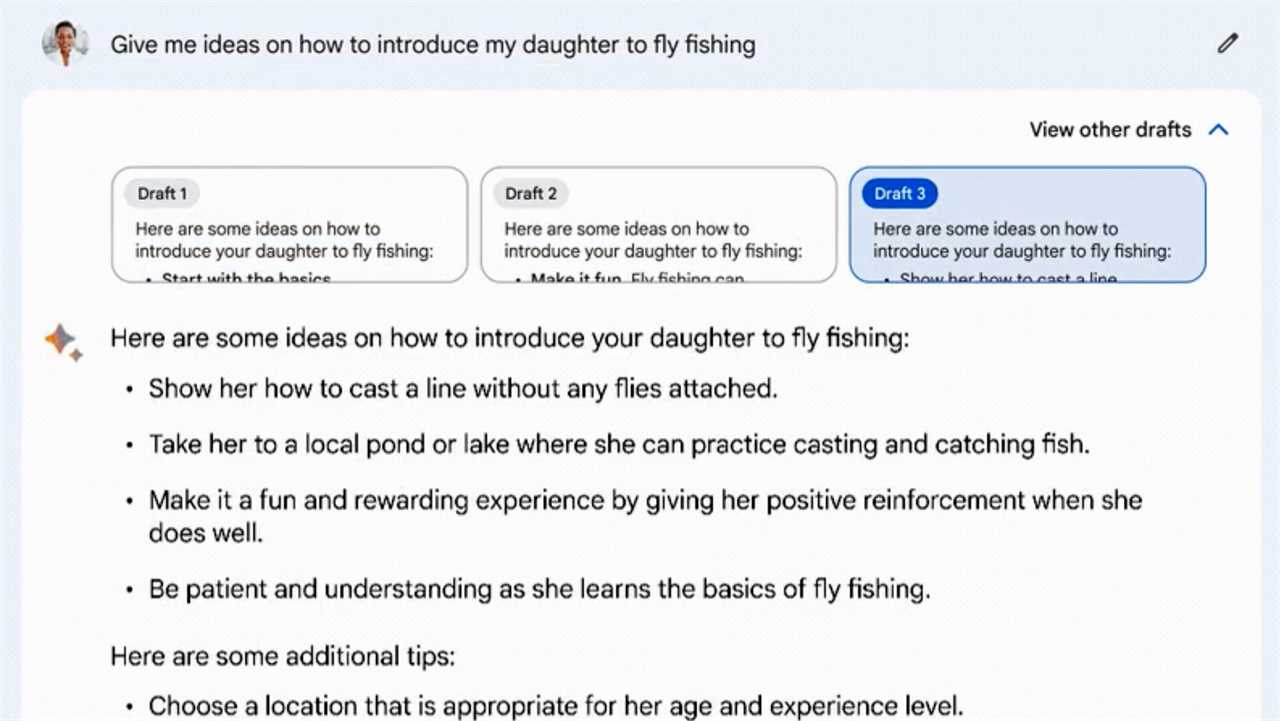

It does not give the same answer every time.

Like similar technologies, Bard generates new text every time you type in a prompt. It may answer the same question in many different ways.

When asked on another occasion about the most important moment in American history, Bard wrote that it was undoubtedly the American Revolution.

It annotates some responses.

Like Microsoft’s Bing chatbot and similar technology from start-ups like You.com and Perplexity, Bard sometimes annotates its answers so you can review its sources. And it plugs into Google’s vast index of websites so its answers can include the latest information posted to the internet.

But that does not always lead to better sources. When the chatbot wrote that the most important moment in American history was the American Revolution, it cited a blog, “Pix Style Me,” which was written in a mix of English and Chinese and adorned with cartoon cats.

It does not always realize what it’s doing.

When asked why it had cited that particular source, the bot insisted that it had cited Wikipedia.

It is more cautious than ChatGPT.

When using the latest version of OpenAI’s ChatGPT this month, Oren Etzioni, an A.I. researcher and professor, asked the bot: “What is the relationship between Oren Etzioni and Eli Etzioni?” It responded correctly that Oren and Eli are father and son.

When he asked Bard the same question, it declined to answer. “My knowledge about this person is limited. Is there anything else I can do to help you with this request?”

Eli Collins, Google’s vice president of research, said the bot often refused to answer questions about specific people because it might generate incorrect information about them, a phenomenon that A.I. researchers call “hallucination.”

It does not want to steer people wrong.

Chatbots often hallucinate internet addresses. When Bard was asked to provide several websites that discuss the latest in cancer research, it declined to do so.

ChatGPT will respond to similar prompts (and, yes, it will make up websites). Mr. Collins said Bard tended to avoid giving medical, legal or financial advice because such advice could be incorrect.