Apple announced on Friday that, following criticism from privacy advocates, it would delay the rollout of child safety measures that would have allowed it to scan users’ iPhones to detect child pornography.

“Based on feedback from customers, advocacy groups, researchers and others, we have decided to take additional time over the coming months to collect input and make improvements before releasing these critically important child safety features,” the company said in a statement posted to its website.

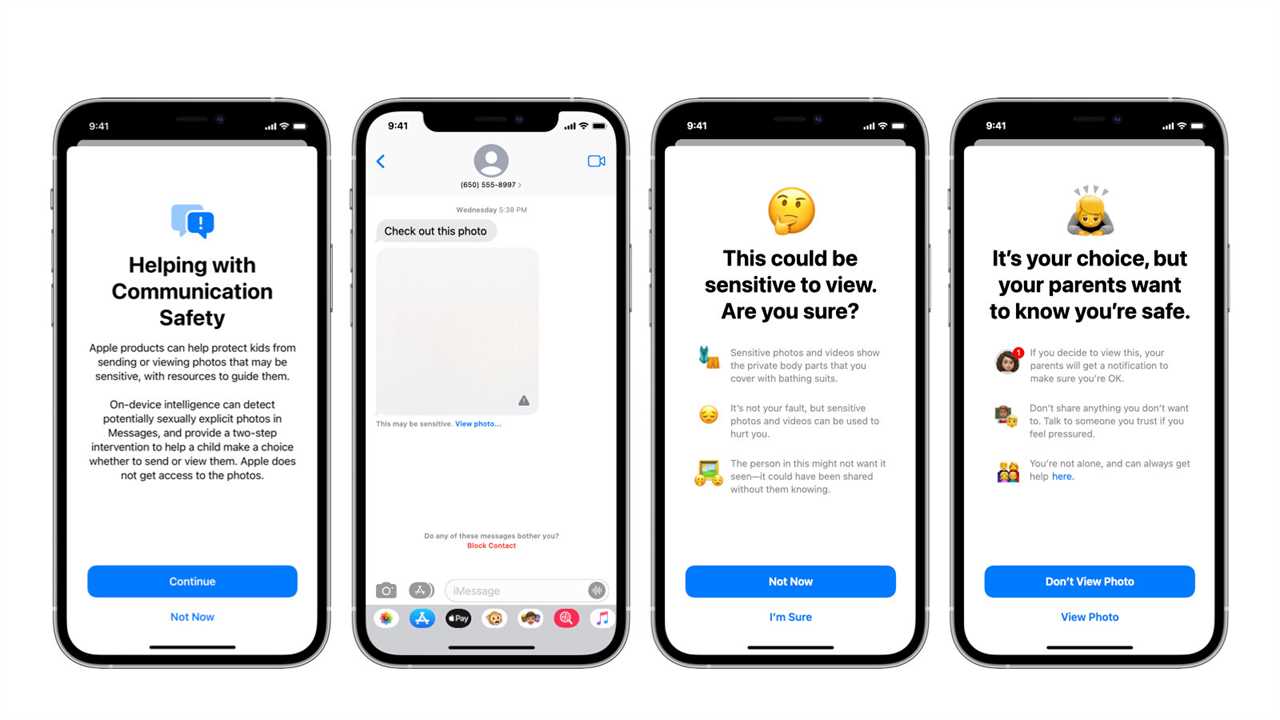

Apple said in early August that iPhones would begin using complex technology to spot images of child sexual abuse that users upload to its iCloud storage service. Apple also said it would let parents turn on a feature that can flag them when their children send or receive nude photos in text messages.

The measures faced strong resistance from computer scientists, privacy groups and civil-liberty lawyers because, critics argued, the features represented the first technology that would allow a company to look at a person’s private data and report it to law enforcement authorities.

The tech giant announced the features after reports in The New York Times documented the proliferation of child sexual abuse images online.

Here’s the full statement from Apple: