As the world of cryptocurrency trading evolves, so does the technology supporting it. In recent years, we've seen a surge in AI tools like ChatGPT being integrated into various facets of the crypto landscape. This development, while exciting, is not without its drawbacks.

A recent survey by my own exchange — Bitget — found that in 80% of cases, crypto traders admitted to having negative experiences with ChatGPT. Specific examples included false investment advice, misinformation and falsification of facts.

Misleading investment recommendations

Artificial intelligence is not an omnipotent entity but, rather, a man-made tool. AI tools, and ChatGPT specifically, operate within parameters set by human developers, and while their capabilities are impressive, they are not without limitations. Our society's understanding of these tools is still maturing, and it's critical to recognize their potential risks, especially when it comes to financial decisions.

In the pursuit of better customer experience and innovation, many fintech companies are exploring the potential benefits of incorporating AI into their operations. Experiments with using AI for customer inquiries, design, copywriting and coding, however, have shown that human talent still outperforms AI most of the time.

Bitget tried using ChatGPT to handle routine customer inquiries. Initially, we were impressed by its proficiency in analyzing and compiling information. However, as we delved into more complex queries, the cracks began to show. Despite being trained on extensive data, ChatGPT sometimes offers misleading, biased or incomplete information, as a recent internal test reminded us.

When our staff inquired about market sentiment regarding a lesser-known altcoin, ChatGPT mistakenly associated the coin's name with a popular internet meme. The resulting response, although amusing, could have been taken seriously by those new to the market who rely heavily on third-party programs.

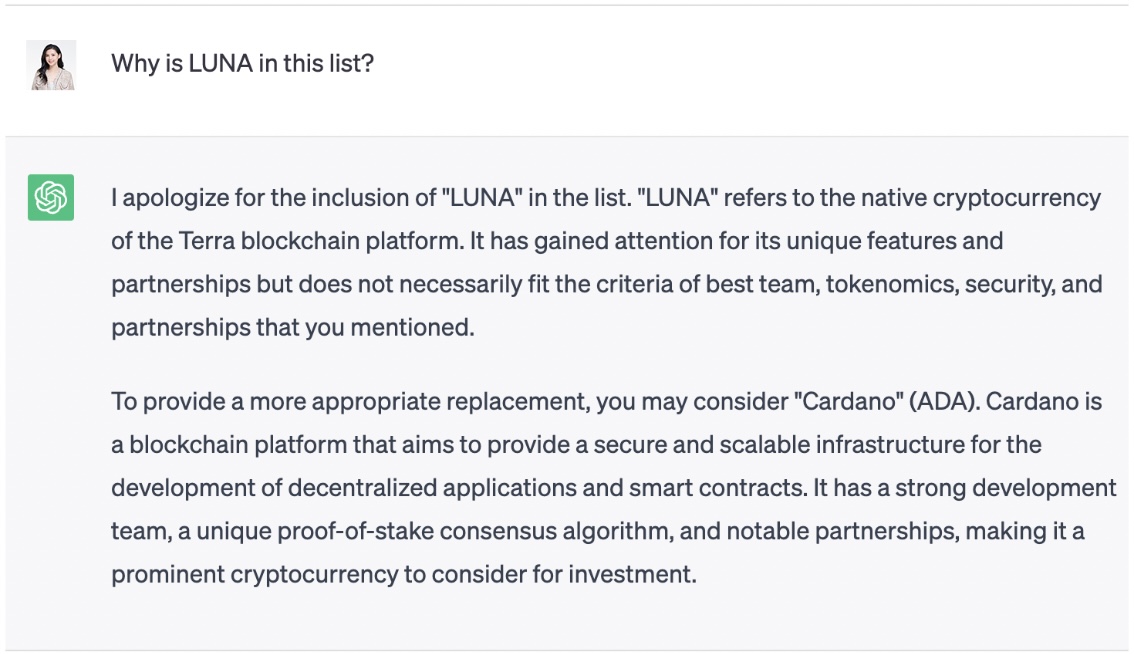

Another request came from a user who asked why Terra’s LUNA was trading 95% lower than a year earlier, after ChatGPT had included it in a list of prospective coins to consider. The user's question to ChatGPT was: “What are 30 cryptocurrencies that I can consider investing in based on the criteria of the best team, tokenomics, security, and reliability?”

When I asked the same question and then pressed ChatGPT on why LUNA was on the recommended list, it quickly changed its answer and offered a different coin instead.

Language models such as GPT-3.5 and GPT-4, which power ChatGPT, are trained on data that stops at a fixed cutoff date. That is unacceptable in the trading market, where speed and relevancy matter. For example, FTX, the crypto exchange that went bankrupt in November 2022, is still safe and sound if ChatGPT is to be believed.

Although the exchange collapsed more than six months ago, ChatGPT’s knowledge only runs until September 2021, which means it assumes FTX is still a going concern.
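The cutoff problem can be made concrete with a small date check. The following is only a minimal sketch, not anything ChatGPT or Bitget actually runs: the names `MODEL_KNOWLEDGE_CUTOFF`, `is_after_cutoff` and `months_between` are hypothetical, the cutoff is taken as the end of September 2021 as stated above, and the FTX date uses the first of November 2022 as a placeholder for "November 2022."

```python
from datetime import date

# Assumption: the training cutoff cited in this article (September 2021),
# represented here as the last day of that month.
MODEL_KNOWLEDGE_CUTOFF = date(2021, 9, 30)


def is_after_cutoff(event_date: date, cutoff: date = MODEL_KNOWLEDGE_CUTOFF) -> bool:
    """Return True if the event happened after the model's training data ends."""
    return event_date > cutoff


def months_between(start: date, end: date) -> int:
    """Whole calendar months from start to end, ignoring the day of the month."""
    return (end.year - start.year) * 12 + (end.month - start.month)


# Placeholder day: the article only gives the month of the FTX bankruptcy.
ftx_bankruptcy = date(2022, 11, 1)

if is_after_cutoff(ftx_bankruptcy):
    gap = months_between(MODEL_KNOWLEDGE_CUTOFF, ftx_bankruptcy)
    print(f"Event is {gap} months past the cutoff; the model cannot know about it.")
```

Any question about an event that falls after the cutoff is one the model can only answer from stale data, which is why such answers need to be checked against a current source.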

The importance of human expertise in crypto trading

The crypto landscape is complex and ever-changing. It requires keen human insight and intuition to navigate its many twists and turns. AI tools, while robust and resourceful, lack the human touch necessary to interpret market nuances and trends accurately. It's important to exercise caution, diligence and critical thinking. In the pursuit of technological advancement, both companies and individuals must not overlook the importance of human intuition and expertise.

As a result, we have chosen to limit our use of AI tools like ChatGPT. Instead, we place a higher emphasis on a blend of human expertise and technological innovation to serve our clients better.

This is not to say that AI tools don't have their place in the crypto sphere. Indeed, for basic queries or simplifying complex topics, they can prove invaluable. However, they should not be seen as a replacement for professional financial advice or independent research. It's essential to remember that these tools, while powerful, are not infallible.

In the crypto world, every piece of information carries weight. Each detail can impact investment decisions, and in this high-stakes environment, a misstep can have significant consequences. Thus, while AI tools can provide quick answers, it's crucial to cross-verify this information from other reliable sources.

Data privacy is another critical aspect to consider. While AI tools like ChatGPT don't inherently pose a privacy risk, they can be misused in the wrong hands. It's crucial to ensure the data you provide is secure and that the AI tools you use adhere to stringent privacy guidelines.

Ethical considerations and data security

Still, AI tools like ChatGPT are not the enemy. They're powerful tools that, when used responsibly and in conjunction with human expertise, can significantly enhance the crypto trading experience. They can explain complex jargon, provide quick responses, and even offer rudimentary market analyses. However, their limitations should be acknowledged, and a responsible approach to their use is essential.

In our journey with ChatGPT, we’ve learned that AI tools are only as effective as their latest update, training and the data they’ve been fed. They may not always be abreast of the latest developments or understand the subtleties of a dynamic and often volatile crypto market. Furthermore, they cannot provide empathy — a quality that is often needed in the tense world of crypto trading.

The integration of AI in crypto trading also raises ethical questions, especially when it comes to decision-making. If a user makes a financial decision based on misleading information provided by an AI tool, who bears the responsibility? It is a question the industry is still grappling with.

Then there is the issue of data security. In an era of data breaches and cyber threats, any technology that collects, stores and processes user data must be scrutinized. While AI tools like ChatGPT don't inherently pose a privacy risk, they are not immune to misuse or hacking. It is paramount to ensure that these tools have robust security measures to protect user data.

It's also worth noting that while AI tools can crunch numbers and provide data-driven insights, they cannot replicate the instinct that experienced traders often rely on: the kind that is honed over years of trading, observing market trends and understanding the psychology of other traders. This is something AI, for all its sophistication, cannot learn or emulate.

While AI tools like ChatGPT offer exciting possibilities for the crypto industry, they should not be seen as a magic bullet. They are tools to aid, not replace, people, intuition and financial expertise. As we continue to explore the potential of AI in the crypto world, we must be mindful of these limitations and potential risks.

This article is for general information purposes and is not intended to be and should not be taken as legal or investment advice. The views, thoughts and opinions expressed here are the author’s alone and do not necessarily reflect or represent the views and opinions of Cointelegraph.

Title: Don't be surprised if AI tries to sabotage your crypto

Sourced From: cointelegraph.com/news/artificial-intelligence-crypto-your-own-risk

Published Date: Thu, 01 Jun 2023 20:14:51 +0100