Deepfakes of political leaders pose a terrifying threat

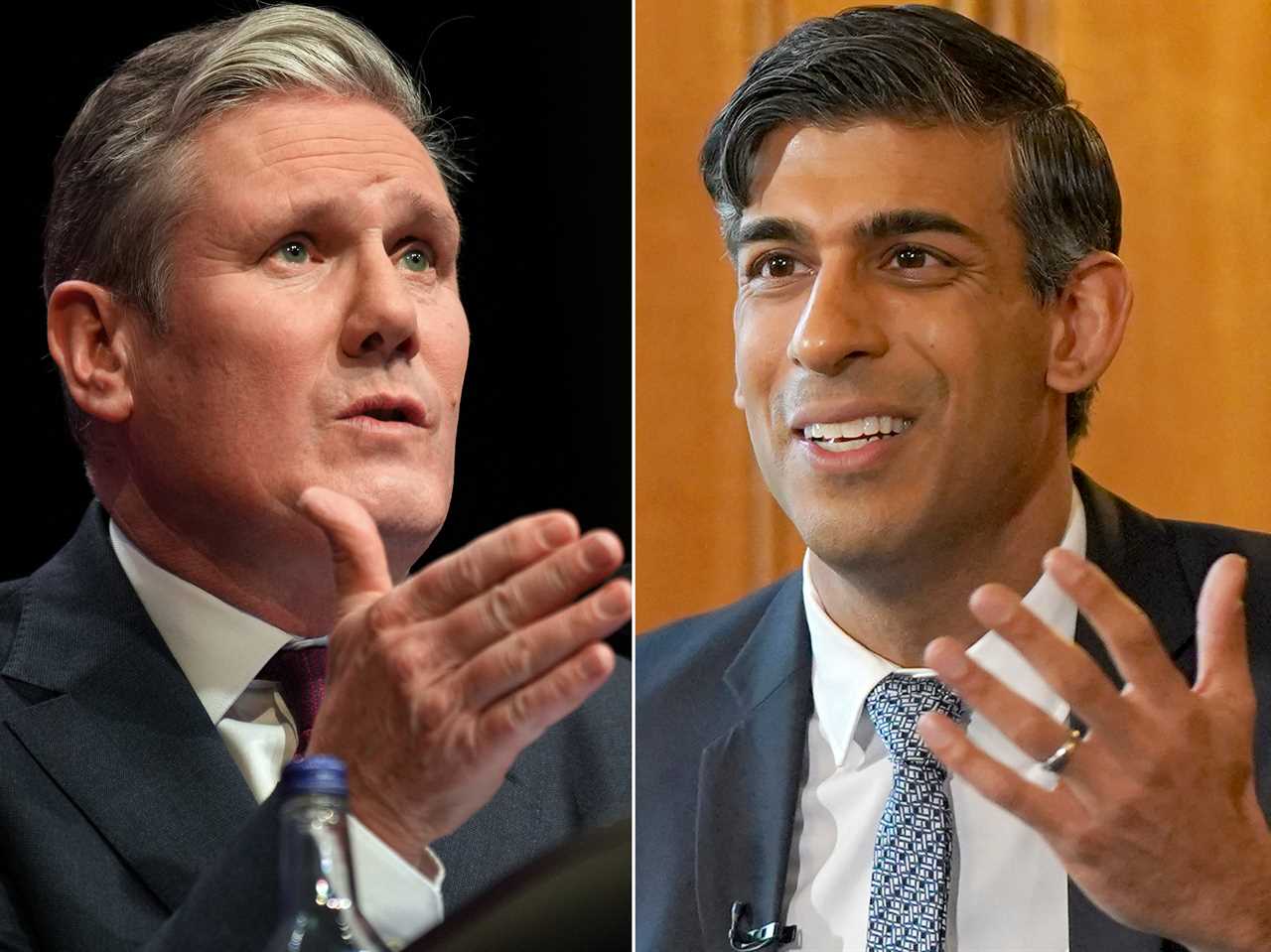

A chilling warning has been issued about the use of AI deepfakes to manipulate the upcoming UK election. Research has revealed that software capable of cloning the voices of prominent figures such as Rishi Sunak and Keir Starmer poses a significant danger.

Concerns raised over safety measures of popular AI voice-cloning tools

Experts tested six popular AI voice-cloning tools and found that the tools produced convincing deepfakes of political leaders 80% of the time, with no safety measures in place to prevent the spread of misinformation during the election period.

Call for tech companies to introduce safeguards

The Center for Countering Digital Hate is urging tech companies to implement measures to prevent the creation and dissemination of fake audio clips. Amid fears of malicious interference in the upcoming election, the need for safeguards against AI-generated disinformation is growing.

Risk of AI technology being weaponized by bad actors

Imran Ahmed, CEO of the Center for Countering Digital Hate, has warned that AI voice-cloning technology could be exploited by malicious actors to mislead voters and undermine the democratic process. Urgent action is needed to address the threat of AI election disinformation before it escalates.